Basic-inspired language interpreter

As part of my journey to delve deeper into the mechanics of programming languages and their interpretation, I decided to build a custom interpreter for a language inspired by BASIC. The goal was to create an interactive, robust, and extensible interpreter capable of parsing, tokenizing, and executing code with minimal overhead, while providing clear error reporting and debugging capabilities. Here's a breakdown of the key design decisions and how they guided the development of the interpreter.

Technologies :

Python

High-Level Design and Key Components

The interpreter was designed to be modular, following the classic structure of a language interpreter: tokenization, parsing, execution, and error handling. I focused on clarity and maintainability, making it easy to add new features and enhance functionality in the future. Here’s how each component was implemented.

Tokenization: The Lexer

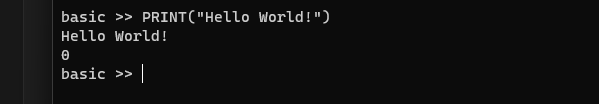

The first step in processing the input source code is tokenization. To achieve this, I created a lexer that reads a sequence of characters and converts them into manageable tokens. Each token represents a meaningful element in the language, such as keywords, operators, literals, or identifiers.

Key Decisions:

- Position Tracking: Using a Position class, the lexer tracks the position of each character in the input (line, column, and index). This proved invaluable when debugging and reporting errors, allowing for precise pinpointing of problems in the source code.

- Token Types: The lexer defines token types (e.g., TT_INT, TT_FLOAT, TT_STRING) and keywords like VAR, IF, FOR, ensuring accurate representation of language constructs. Each token also captures its value and position, making it easier to handle runtime errors later in the interpreter.

- Error Handling: During tokenization, I integrated robust error handling to catch illegal characters and unexpected syntax. For instance, if the lexer encounters an unrecognized character, it raises an IllegalCharError, ensuring that invalid inputs are promptly rejected before reaching later stages of interpretation.

- Whitespace & Comments: The lexer efficiently handles comments and whitespace, skipping over them without affecting the parsing process, ensuring a clean token stream for the parser to work with.

Parsing: Building the Abstract Syntax Tree (AST)

Once the lexer produces a list of tokens, the next step is parsing them into an Abstract Syntax Tree (AST). The parser processes tokens sequentially, transforming them into structured nodes that represent the language's syntax. Each node type corresponds to a specific language construct, such as expressions, variables, and control flow statements.

Key Decisions:

- Recursive Descent Parsing: I implemented a recursive descent parser, which breaks down expressions, control structures, and statements using a set of mutually-recursive methods. This method is simple and intuitive, making it easy to add new syntax rules if necessary.

- Modularity: Each component of the language (e.g., expressions, statements, loops) has its own parsing function. This modular approach allowed me to keep the codebase clean and focused, making maintenance and debugging more manageable.

- Error Handling: The parser uses custom error classes like ExpectedCharError and InvalidSyntaxError to catch mistakes in syntax or mismatched tokens. This ensures that invalid syntax is caught early in the parsing stage, preventing complex errors from bubbling up to the runtime.

Execution: Interpreting the AST

After parsing, the interpreter executes the AST, where each node is processed to perform the desired operation. The interpreter follows a visitor pattern, where specific methods handle different node types (e.g., visit_BinOpNode for binary operations, visit_VarAccessNode for variable access, etc.)

Key Decisions:

- Runtime Environment: The interpreter maintains a symbol table to store variable values. It uses contexts to manage scopes, ensuring that variables are correctly resolved in the appropriate scope (e.g., local vs. global).

- Value Class: A key design decision was the creation of a Value class that serves as the base class for all types of values in the interpreter (e.g., Number, String, List). This allows for polymorphism, where operations on different types (like arithmetic operations or comparisons) can be handled uniformly through the Value interface.

- Control Flow Handling: The interpreter supports control flow through if, for, while loops, and function calls. Each control flow construct (like visit_IfNode or visit_WhileNode) processes the associated node, executing the appropriate block of code when conditions are met. Special control flow nodes like ReturnNode, ContinueNode, and BreakNode manage the flow of execution and ensure that statements like return, continue, and break work as expected.

Error Handling: Clear and Descriptive Reporting

Error handling was a critical aspect of the interpreter’s design, ensuring that users are given clear, actionable feedback when things go wrong. I created a custom error hierarchy with classes like IllegalCharError, ExpectedCharError, InvalidSyntaxError, and RTError to handle different types of failures.

Key Decisions:

- Tracebacks: Using methods like generate_traceback() and string_with_arrows(), the interpreter provides detailed error messages that include the line and column where the error occurred, along with a visual representation of the problematic line. This makes debugging significantly easier for users.

- Runtime Errors: At runtime, the interpreter raises RTError for issues like dividing by zero, accessing undefined variables, or attempting invalid operations on incompatible data types. This ensures that users are alerted immediately when their code produces an error during execution.

Built-In Functions: Extending the Language

To make the language more practical, I included a set of built-in functions that users can leverage. These include utility functions like print(), input(), and clear(), as well as type-checking functions like is_number() and is_string().

Key Decisions:

- BaseFunction Class: I created a BaseFunction class that serves as the parent for both user-defined and built-in functions. This class manages function execution contexts and argument validation.

- Built-In Functions: The BuiltInFunction class is responsible for implementing common functions, ensuring that they can be used directly within the language without needing to define them by hand. This allows for greater expressiveness and ease of use in the language.

The Interpreter App

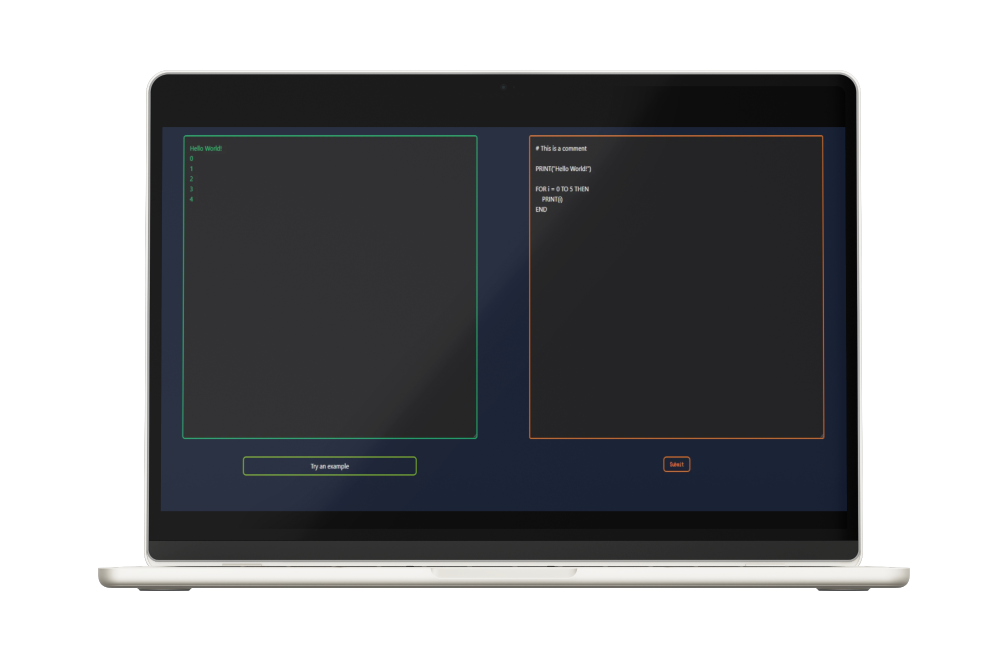

This project is a full-stack application combining a FastAPI-based backend and a React-based frontend to create a seamless code execution platform. The application enables users to submit and execute code dynamically by typing snippets directly into a text area. Designed for both efficiency and user-friendliness, the backend leverages FastAPI’s asynchronous capabilities, while the frontend provides an interface for interaction.

Key Features

Code Execution from File Upload

- A dedicated endpoint in the FastAPI backend accepts POST requests with a file upload containing code.

- Processes the uploaded file, executes the code, and returns the output or detailed error feedback.

Dynamic Code Submission via Frontend

- The React-based frontend allows users to input code snippets directly into a text area.

- Facilitates real-time communication with the backend, displaying execution results instantly.

CORS Middleware for Seamless Integration

- The FastAPI backend includes CORS middleware to enable secure and smooth interaction with the frontend.

User-Friendly Interface

- The React frontend offers an intuitive and elegant design, ensuring an optimal user experience for code submission and result viewing.

Scalable and Efficient Architecture

- By combining FastAPI’s asynchronous capabilities with React’s dynamic UI features, the application achieves a balance between performance and scalability, making it suitable for various development and educational purposes.

Future Enhancements and Scalability

The design of this interpreter is intentionally modular, allowing for easy extension and improvement. In the future, I plan to:

- Add support for more complex data structures, like dictionaries and sets.

- Enhance error handling with more specific cases for different types of runtime issues.

- Implement more advanced language features, such as first-class functions, closures, and advanced control flow mechanisms.

© 2025 Thamini Perera. All rights reserved.